General information

Feature selection is the process of reducing the number of input variables (features) in a dataset. This brick offers three feature selection strategies: correlation analysis, variance inflation factor, and recursive feature elimination.

- Correlation Analysis

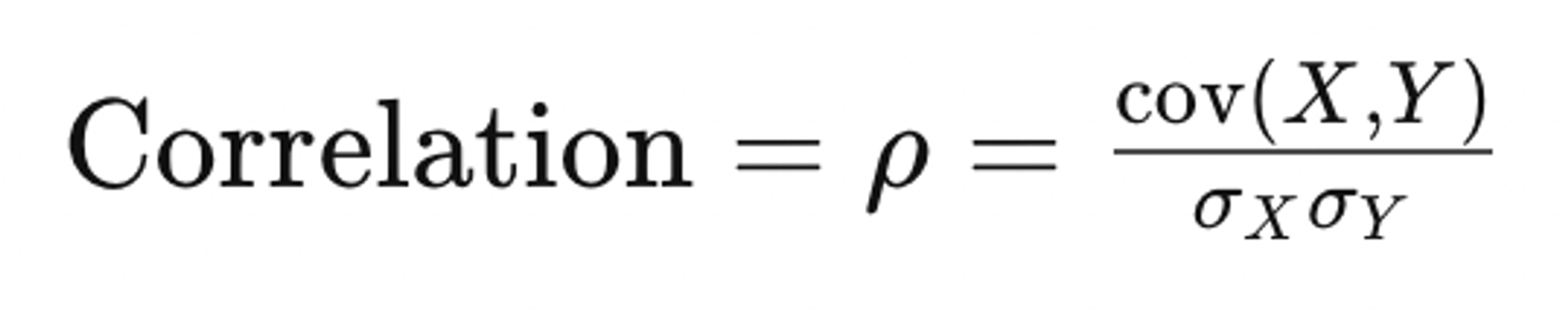

Correlation analysis measures the strength of the linear relationship between two variables. It describes how consistently one variable changes as the other changes. A correlation close to 0 indicates a weak relationship between the two variables, while a correlation close to 1 or -1 indicates that the variables are strongly related.

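The brick calculates the Pearson correlation coefficient between all features in the input using the formula below:

$$
r_{xy} = \frac{\sum_{i=1}^{n} (x_i - \bar{x})(y_i - \bar{y})}{\sqrt{\sum_{i=1}^{n} (x_i - \bar{x})^2}\,\sqrt{\sum_{i=1}^{n} (y_i - \bar{y})^2}}
$$

where $x_i$ and $y_i$ are the values of the two features in row $i$, $\bar{x}$ and $\bar{y}$ are their means, and $n$ is the number of rows.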
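As a small worked example (the numbers are made up for illustration), take $x = (1, 2, 3)$ and $y = (2, 4, 7)$, so $\bar{x} = 2$ and $\bar{y} = 13/3$. Substituting the deviations into the Pearson coefficient gives:

$$
r_{xy} = \frac{(-1)\left(-\tfrac{7}{3}\right) + 0\cdot\left(-\tfrac{1}{3}\right) + 1\cdot\tfrac{8}{3}}{\sqrt{(-1)^2 + 0^2 + 1^2}\,\sqrt{\left(-\tfrac{7}{3}\right)^2 + \left(-\tfrac{1}{3}\right)^2 + \left(\tfrac{8}{3}\right)^2}} = \frac{5}{\sqrt{2}\,\sqrt{38/3}} \approx 0.993
$$

Such a pair would exceed, for example, a correlation threshold of 0.9.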

In other words, the brick takes every pair of features (columns) and computes their correlation. When the correlation of a pair exceeds the chosen threshold, one feature of that pair is dropped.
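A minimal sketch of correlation-based filtering in stdlib-only Python. Because the brick's internals are not documented here, the following details are assumptions: columns are compared by absolute correlation, columns are visited in input order, and when a column's correlation with an already-kept column exceeds the threshold, the later column is dropped. `pearson` and `drop_correlated` are hypothetical names. With pandas, the pairwise coefficients would typically come from `DataFrame.corr()`.

```python
from math import sqrt


def pearson(x, y):
    """Pearson correlation coefficient of two equal-length numeric sequences."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    if var_x == 0 or var_y == 0:
        return 0.0  # constant column: treated as uncorrelated (an assumption)
    return cov / sqrt(var_x * var_y)


def drop_correlated(columns, threshold):
    """Return the names of the columns kept after correlation filtering.

    `columns` maps a column name to its list of numeric values. Columns are
    visited in order; a column is dropped when its absolute correlation with
    any already-kept column exceeds `threshold` (hypothetical rule).
    """
    kept = []
    for name, values in columns.items():
        if all(abs(pearson(values, columns[other])) <= threshold for other in kept):
            kept.append(name)
    return kept


data = {
    "a": [1, 2, 3, 4, 5],
    "b": [2, 4, 6, 8, 11],  # almost a linear function of "a"
    "c": [5, 3, 4, 1, 2],   # correlation with "a" is -0.8
}
print(drop_correlated(data, threshold=0.9))  # ['a', 'c']
print(drop_correlated(data, threshold=0.7))  # ['a']
```

Lowering the threshold toward 0.7 removes more columns, which matches the behaviour described for the correlation threshold parameter.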

- Variance Inflation Factor

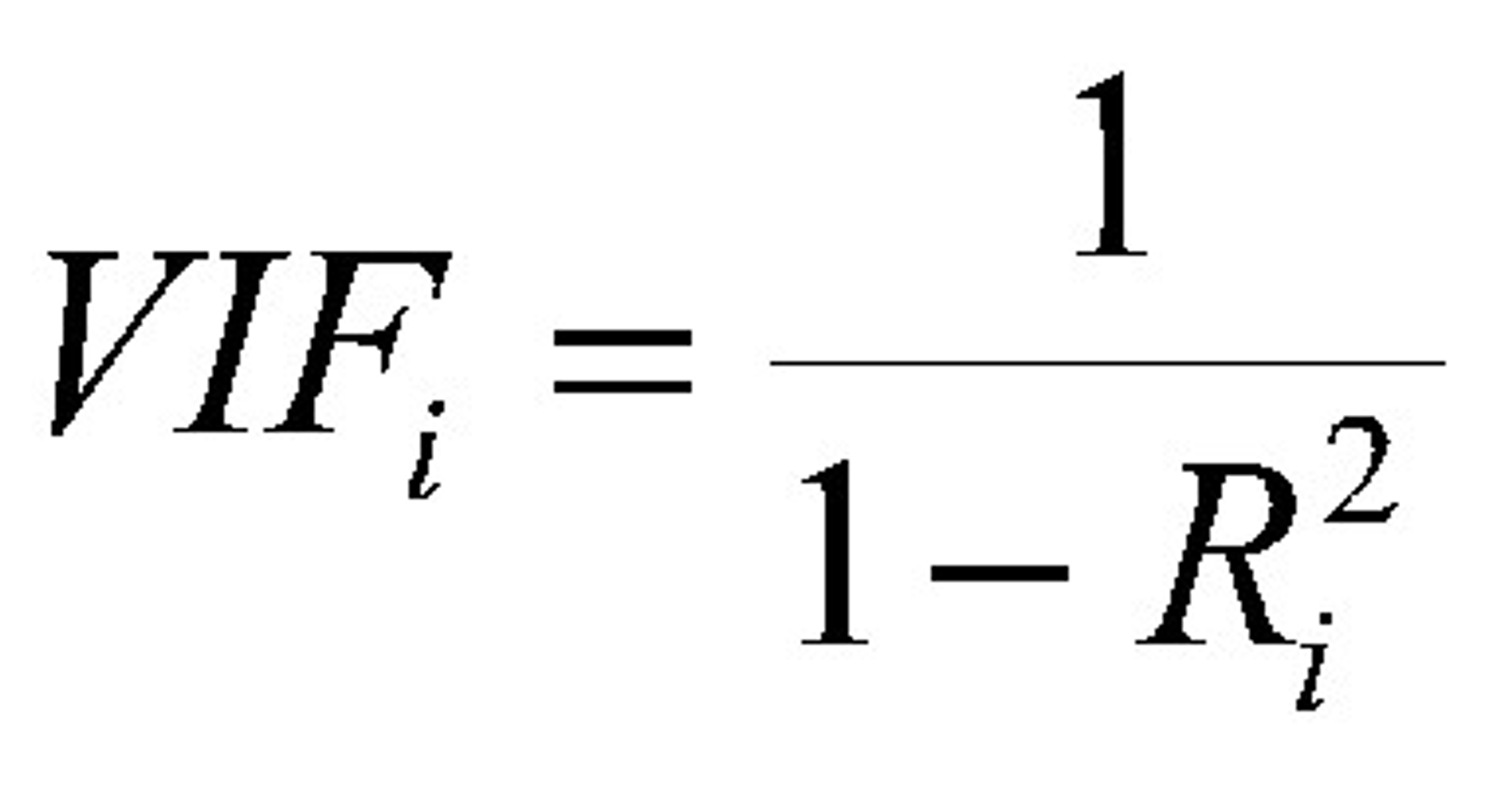

A variance inflation factor (VIF) detects multicollinearity in regression analysis. Multicollinearity occurs when several predictors in a multiple regression model are correlated with one another, and it can significantly affect the regression results. The VIF estimates how much the variance of a regression coefficient is inflated due to multicollinearity in the model. A common rule of thumb is that a VIF greater than 5 indicates that the feature (column) is highly collinear with the other explanatory variables, so its coefficient estimate will have a large standard error.

The VIF of feature $j$ is calculated as:

$$
\mathrm{VIF}_j = \frac{1}{1 - R_j^2}
$$

where $R_j^2$ is the coefficient of determination of the regression of feature $X_j$ on all the other features. For example, $R_j^2 = 0.8$ gives $\mathrm{VIF}_j = 5$, and $R_j^2 = 0.9$ gives $\mathrm{VIF}_j = 10$. The VIF equals 1 when the vector $X_j$ is orthogonal to each column of the design matrix for the regression of $X_j$ on the other covariates. By contrast, the VIF is greater than 1 when $X_j$ is not orthogonal to all columns of that design matrix. Finally, note that the VIF is invariant to the scaling of the variables (that is, we could scale each variable by a constant without changing the VIF).
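A VIF can be computed by regressing each feature on all the others with ordinary least squares and taking $1/(1-R_j^2)$. The sketch below does this in stdlib-only Python so that it is self-contained. The helper names (`solve`, `r_squared`, `vif`, `drop_high_vif`) are hypothetical. The elimination rule is also an assumption about how the brick applies its threshold: it removes the column with the highest VIF, recomputes, and stops once every VIF is at or below the threshold. For real data, statsmodels provides `variance_inflation_factor` in `statsmodels.stats.outliers_influence`.

```python
def solve(a, b):
    """Solve the linear system a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(a)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]  # augmented matrix copy
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def r_squared(y, predictors):
    """R^2 of an OLS fit of y on the predictor columns plus an intercept."""
    rows = [[1.0] + [p[i] for p in predictors] for i in range(len(y))]
    k = len(rows[0])
    # Normal equations (X^T X) beta = X^T y; assumes no exact collinearity.
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(k)] for i in range(k)]
    xty = [sum(r[i] * yi for r, yi in zip(rows, y)) for i in range(k)]
    beta = solve(xtx, xty)
    mean_y = sum(y) / len(y)
    ss_res = sum((yi - sum(b * v for b, v in zip(beta, r))) ** 2 for r, yi in zip(rows, y))
    ss_tot = sum((yi - mean_y) ** 2 for yi in y)
    return 1.0 - ss_res / ss_tot


def vif(columns):
    """VIF_j = 1 / (1 - R_j^2) for every column, regressing it on all the others."""
    scores = {}
    for name, values in columns.items():
        others = [v for other, v in columns.items() if other != name]
        scores[name] = 1.0 / (1.0 - r_squared(values, others))
    return scores


def drop_high_vif(columns, threshold):
    """Repeatedly remove the column with the largest VIF until every VIF <= threshold."""
    remaining = dict(columns)
    while len(remaining) > 1:
        scores = vif(remaining)
        worst = max(scores, key=scores.get)
        if scores[worst] <= threshold:
            break
        del remaining[worst]
    return list(remaining)


data = {
    "x1": [1, 2, 3, 4, 5, 6],
    "x2": [3, 1, 4, 1, 5, 9],
    "x3": [4.1, 2.9, 7.1, 4.9, 10.1, 14.9],  # x1 + x2 plus a little noise
}
print(drop_high_vif(data, threshold=5.0))  # ['x1', 'x2']
```

With only two features, $R_j^2$ is the squared correlation between them, so the two VIFs are equal.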

- Recursive Feature Elimination

Given an external estimator that assigns weights to features, recursive feature elimination (RFE) selects features by recursively considering smaller and smaller sets of features. First, the estimator (here, a linear or logistic regression model) is trained on the initial set of features, and the importance of each feature is obtained from the fitted model, for example from the magnitude of its coefficients. Then, the least important features are pruned from the current set. This procedure is repeated on the pruned set until the desired number of selected features is reached.
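A minimal, stdlib-only sketch of the RFE loop with a linear regression estimator. It makes several assumptions and is not the brick's code. Each feature is standardized before fitting so that coefficient magnitudes are comparable, which the brick may not do. One feature is removed per iteration. Only the linear model is shown, not the logistic one. The helper names (`solve`, `standardize`, `linear_coefficients`, `rfe`) are hypothetical. In practice, scikit-learn's `sklearn.feature_selection.RFE` implements this procedure, with `n_features_to_select` and `step` parameters.

```python
from math import sqrt


def solve(a, b):
    """Solve the linear system a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(a)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]  # augmented matrix copy
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def standardize(values):
    """Scale a column to zero mean and unit variance (assumes it is not constant)."""
    mean = sum(values) / len(values)
    sd = sqrt(sum((v - mean) ** 2 for v in values) / len(values))
    return [(v - mean) / sd for v in values]


def linear_coefficients(features, y):
    """OLS slopes of y on the feature columns; an intercept is fitted but not returned."""
    rows = [[1.0] + [f[i] for f in features] for i in range(len(y))]
    k = len(rows[0])
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(k)] for i in range(k)]
    xty = [sum(r[i] * yi for r, yi in zip(rows, y)) for i in range(k)]
    return solve(xtx, xty)[1:]


def rfe(columns, y, n_features_to_select):
    """Drop the feature with the smallest |coefficient| until n_features_to_select remain."""
    if n_features_to_select < 1:
        raise ValueError("n_features_to_select must be at least 1")
    selected = list(columns)
    while len(selected) > n_features_to_select:
        coefs = linear_coefficients([standardize(columns[name]) for name in selected], y)
        weakest = min(zip(selected, coefs), key=lambda pair: abs(pair[1]))[0]
        selected.remove(weakest)
    return selected


columns = {
    "a": [1, 2, 3, 4, 5, 6],
    "b": [2, 1, 4, 3, 6, 5],
    "c": [3, 1, 4, 1, 5, 9],
}
y = [3 * a + 0.5 * b for a, b in zip(columns["a"], columns["b"])]  # "c" plays no role
print(rfe(columns, y, 2))  # ['a', 'b']
print(rfe(columns, y, 1))  # ['a']
```

Refitting after every removal is what distinguishes RFE from ranking all features once: a feature's coefficient can change when a correlated feature leaves the model.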

As the estimator, the brick uses a logistic regression model when the target column is of integer or boolean type and has fewer than 100 unique values; otherwise, it uses a linear regression model.
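The estimator choice rule can be written as a small function. This is a sketch. `choose_estimator` is a hypothetical name, and the target is taken as a plain Python list. The integer/boolean check uses `isinstance`, and Python's `bool` is a subclass of `int`. On a pandas column, the equivalent checks would be `pandas.api.types.is_integer_dtype` or `is_bool_dtype` together with `Series.nunique()`.

```python
def choose_estimator(target):
    """Return "logistic" or "linear" for a list of target values.

    Logistic regression is chosen when every value is an int or bool and
    there are fewer than 100 distinct values; otherwise linear regression.
    """
    is_integer_like = all(isinstance(v, (bool, int)) for v in target)
    if is_integer_like and len(set(target)) < 100:
        return "logistic"
    return "linear"


print(choose_estimator([0, 1, 1, 0]))        # logistic
print(choose_estimator([1.5, 2.0, 3.25]))    # linear: float values
print(choose_estimator(list(range(200))))    # linear: 200 distinct values
```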

The brick lets you choose the number of features to keep in the output.

Description

Brick Location

Bricks → Transformation → Feature Selection

Brick Parameters

- Method

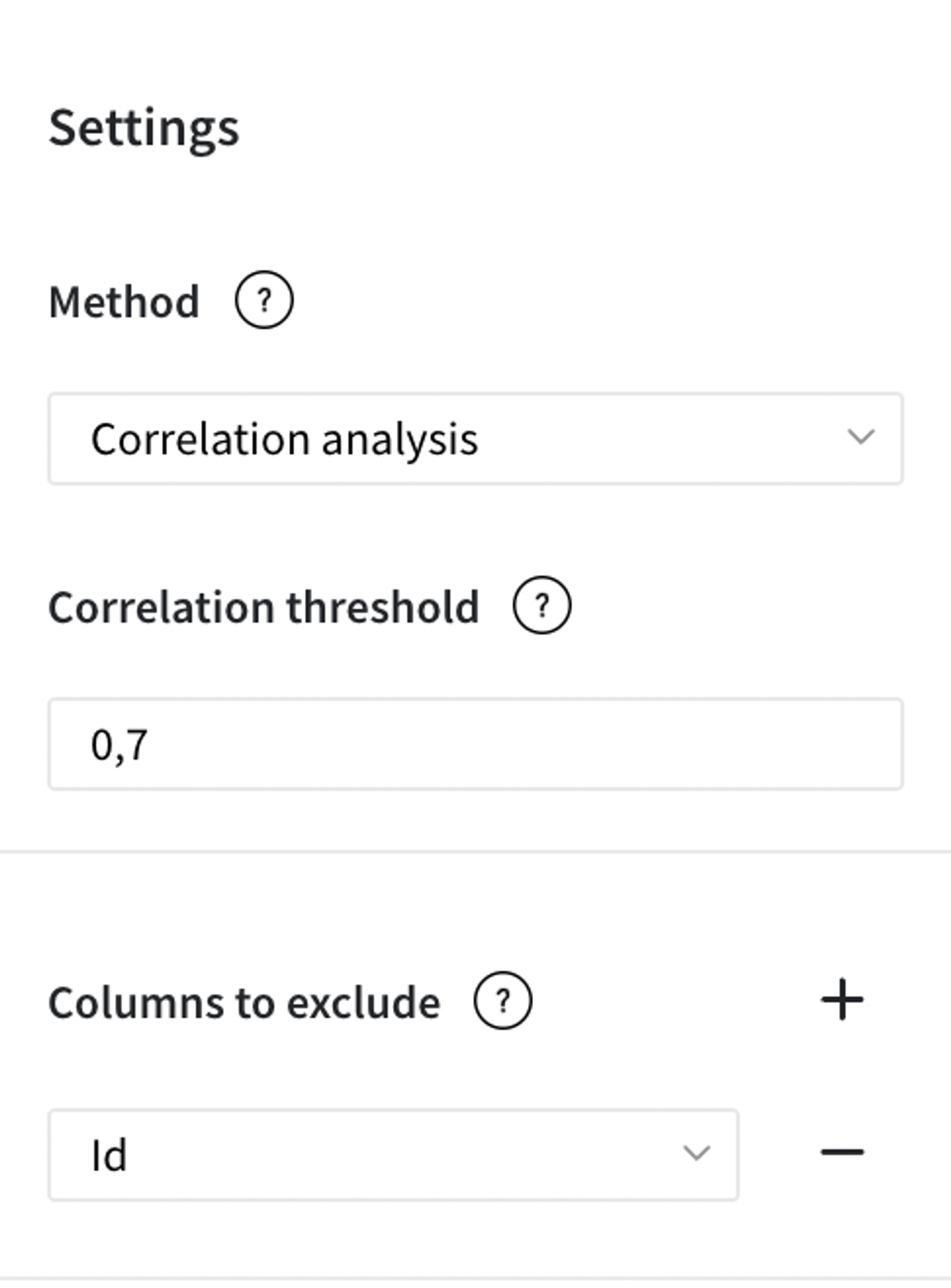

Three methods are available: correlation analysis, variance inflation factor, and recursive feature elimination. Each method is briefly described in the General information section above.

- Correlation threshold

This parameter is available when the correlation analysis method is selected. The value ranges from 0.7 to 1; the closer it is to 0.7, the more features are eliminated.

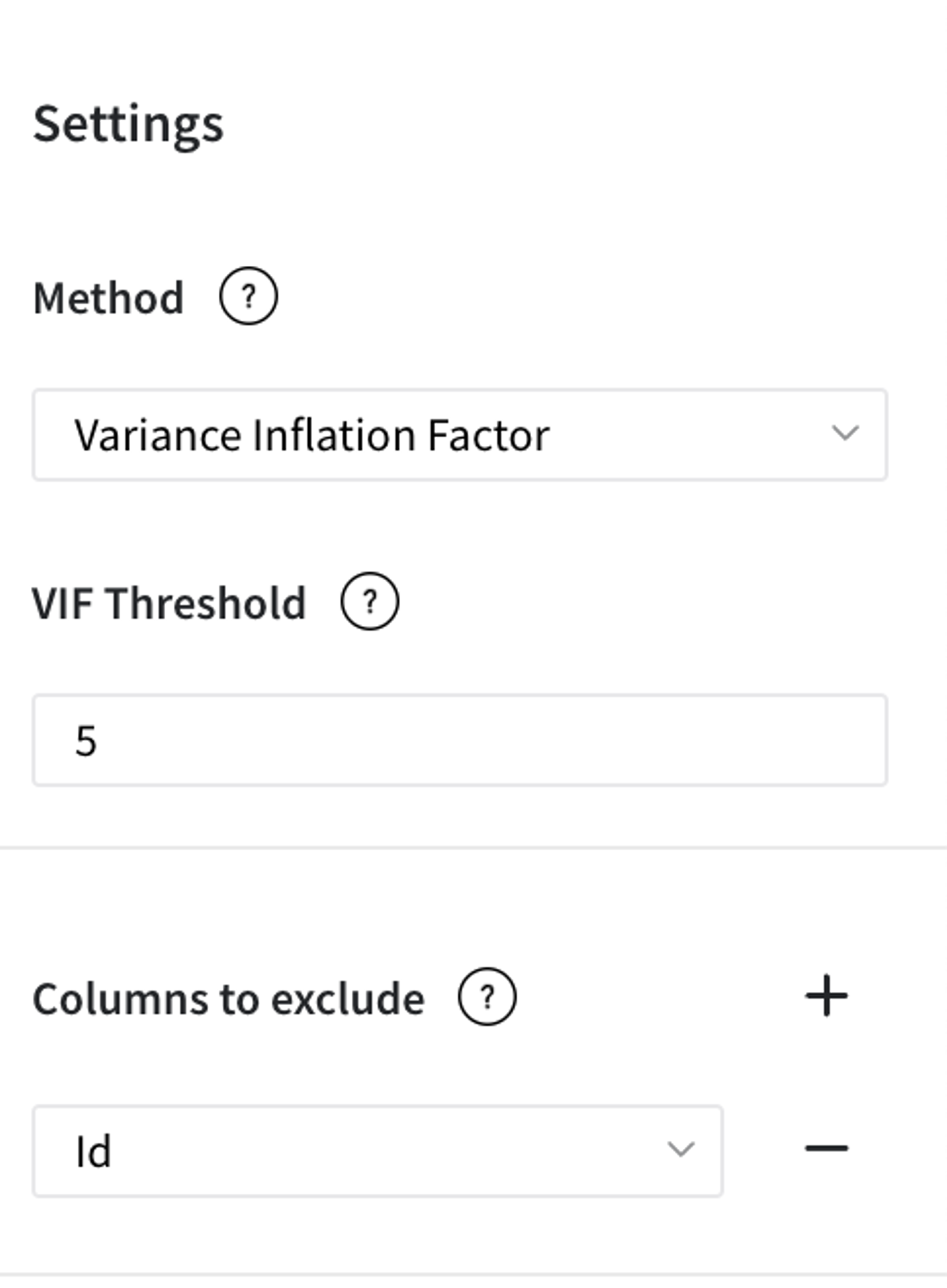

- Columns to exclude

Columns selected here are excluded from feature selection: the brick performs no actions on them.

- VIF threshold

This parameter is available when the variance inflation factor method is selected. The value ranges from 5 to 10; the closer it is to 5, the more features are eliminated.

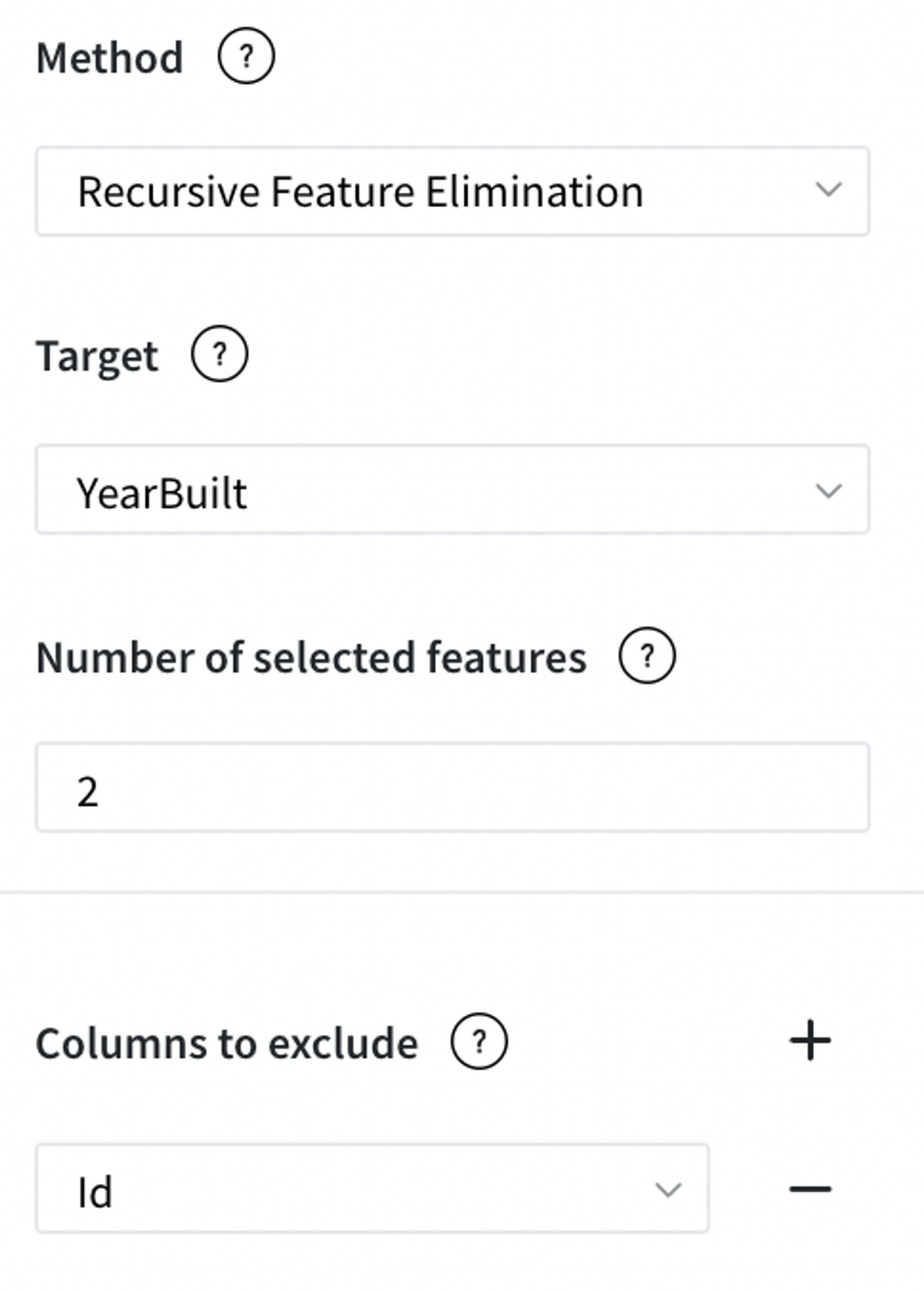

- Target

This parameter is available when the recursive feature elimination method is selected. Choose one input column as the target; it is used as the dependent variable of the logistic or linear regression model.

- Number of selected features

This parameter is available when the recursive feature elimination method is selected. It sets the number of features to keep in the output; the value must be greater than 0.

Brick Inputs/Outputs

- Inputs

The brick takes a dataset.

- Outputs

- A new dataset with the same or a smaller number of columns
- Transformer: the fitted feature selection, which can be saved and applied to future data
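The parameter constraints from the Brick Parameters section can be collected into a small validation sketch. Several details are assumptions for illustration, not the brick's actual configuration format: the method keys (`"correlation"`, `"vif"`, `"rfe"`), the function name `check_params`, and treating the range limits as inclusive.

```python
def check_params(method, columns, correlation_threshold=None, vif_threshold=None,
                 target=None, n_features=None):
    """Return a list of problems with a hypothetical Feature Selection configuration."""
    errors = []
    if method == "correlation":
        if correlation_threshold is None or not 0.7 <= correlation_threshold <= 1:
            errors.append("correlation threshold must be between 0.7 and 1")
    elif method == "vif":
        if vif_threshold is None or not 5 <= vif_threshold <= 10:
            errors.append("VIF threshold must be between 5 and 10")
    elif method == "rfe":
        if target not in columns:
            errors.append("target must be one of the input columns")
        if n_features is None or n_features < 1:
            errors.append("number of selected features must be at least 1")
    else:
        errors.append(f"unknown method: {method!r}")
    return errors


cols = ["id", "GrLivArea", "SalePrice"]
print(check_params("correlation", cols, correlation_threshold=0.8))  # []
print(check_params("vif", cols, vif_threshold=3))
print(check_params("rfe", cols, target="SalePrice", n_features=0))
```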

Example of Usage

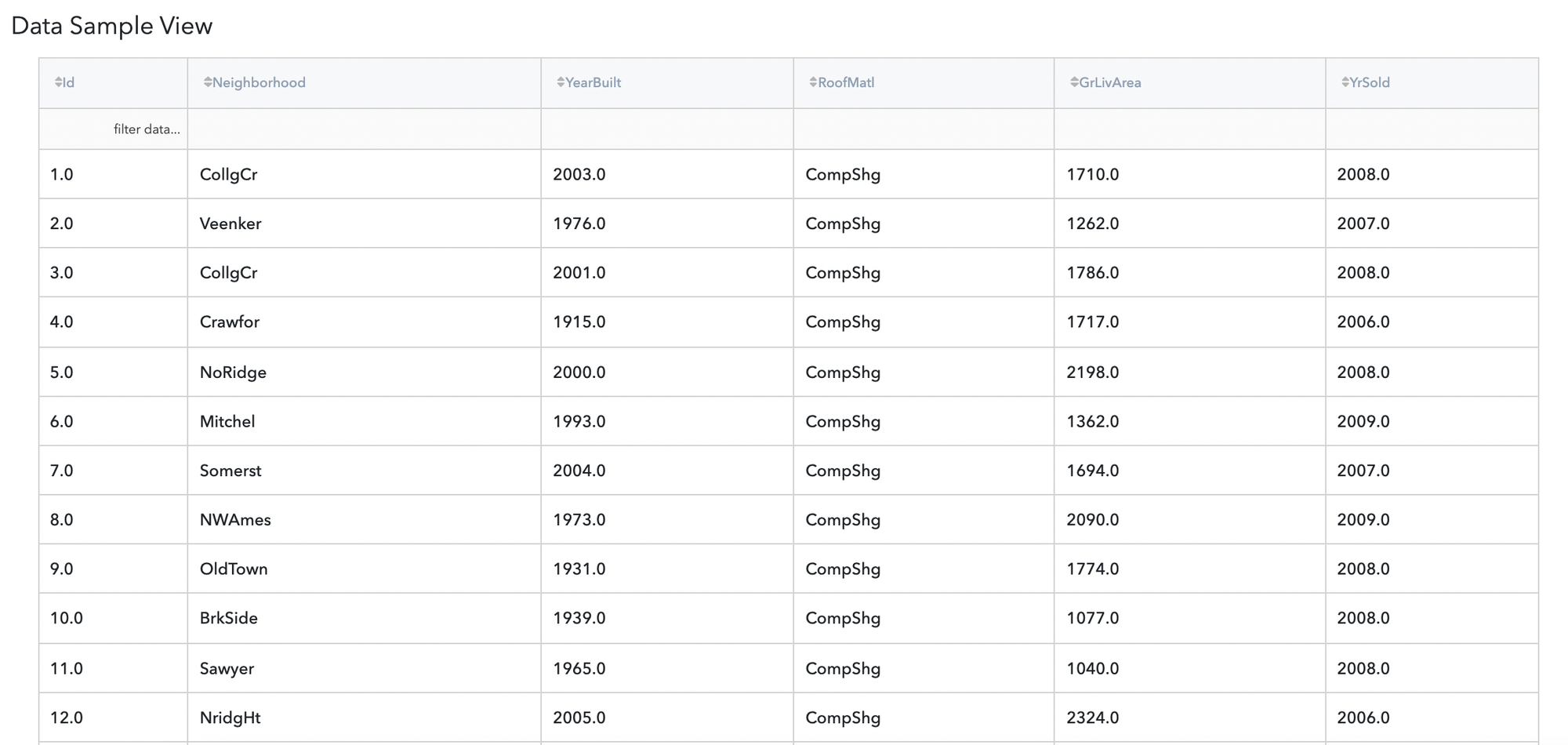

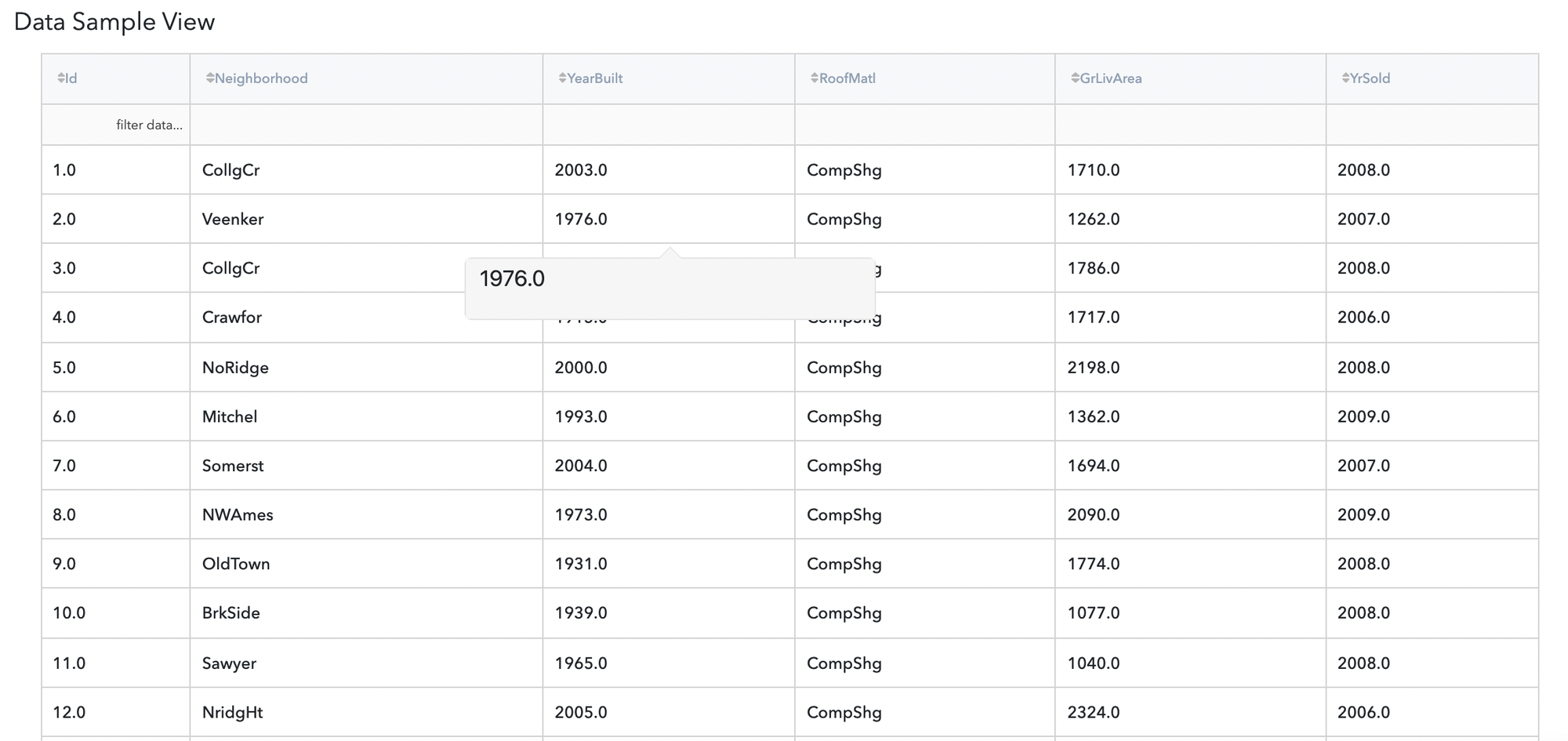

Let’s assume we have a house prices dataset with seven columns: id, Neighbourhood, YearBuilt, RoofMatl, GrLivArea, YrSold, and SalePrice.

First, we try the first method, correlation analysis, with the parameters listed below.

The output of the brick is as follows:

As we can see, the SalePrice column is eliminated, leaving only 6 columns in the output. The resulting transformer can also be saved and applied to future data.

Now let’s look at the variance inflation factor method with the parameters listed below:

The result of the brick is as follows:

As you can see, this method has not removed any columns. This is because it works differently from correlation analysis: VIF measures how well each feature is explained by all the other features together, instead of comparing features pair by pair. Let’s move on to the third method.

The third method is recursive feature elimination, with the parameters listed below:

The result is presented below: