General Information

This brick provides an easy interface for creating your own out-of-the-box, distributed gradient-boosted decision tree model for binary classification tasks. Because the trees are grown leaf-wise, the model trains efficiently on large datasets while still delivering strong results.

The models are built on three important principles:

- Weak learners

- Gradient Optimization

- Boosting Technique

In this case, the weak learners are decision trees trained one after another, each with its own job:

- The first tree learns how to fit the target variable.

- The second tree learns how to fit the difference between the predictions of the first tree and the ground truth (real data).

- The next tree learns how to fit the residuals of the second tree, and so on.

All of these trees are trained by propagating the gradients of the errors through the ensemble.
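The sequence above can be sketched in a few lines of plain Python. This is a toy illustration and not the brick's implementation: it uses squared-error residuals and one-split "stumps", whereas a binary classifier would normally follow the gradients of a log-loss objective and grow deeper, leaf-wise trees. The names `fit_stump`, `predict_stump`, and `boost` are hypothetical.

```python
def fit_stump(xs, residuals):
    """Fit a one-split tree: pick the threshold that minimises squared error.

    Assumes xs contains at least two distinct values.
    """
    best = None
    # The largest value is skipped because it would leave the right side empty.
    for threshold in sorted(set(xs))[:-1]:
        left = [r for x, r in zip(xs, residuals) if x <= threshold]
        right = [r for x, r in zip(xs, residuals) if x > threshold]
        left_mean = sum(left) / len(left)
        right_mean = sum(right) / len(right)
        error = (sum((r - left_mean) ** 2 for r in left)
                 + sum((r - right_mean) ** 2 for r in right))
        if best is None or error < best[0]:
            best = (error, threshold, left_mean, right_mean)
    return best[1:]


def predict_stump(stump, x):
    threshold, left_value, right_value = stump
    return left_value if x <= threshold else right_value


def boost(xs, ys, n_trees=20, learning_rate=0.5):
    """Train stumps sequentially; each one fits what the previous ones missed."""
    predictions = [0.0] * len(xs)  # so the first stump fits the target itself
    stumps, errors = [], []
    for _ in range(n_trees):
        residuals = [y - p for y, p in zip(ys, predictions)]
        stump = fit_stump(xs, residuals)
        stumps.append(stump)
        predictions = [p + learning_rate * predict_stump(stump, x)
                       for p, x in zip(predictions, xs)]
        errors.append(sum((y - p) ** 2 for y, p in zip(ys, predictions)) / len(ys))
    return stumps, errors


xs = [1, 2, 3, 4, 5, 6, 7, 8]
ys = [0, 0, 0, 1, 0, 1, 1, 1]
stumps, errors = boost(xs, ys)
```

The mean squared error in `errors` never increases from one round to the next, because each stump removes part of the residual left by the trees before it.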

The main drawback of LGBM Binary is that finding the best split points in each tree node is costly in both time and memory.

Description

Brick Location

Bricks → Analytics → Data Mining / AI → Classification Models → LGBM Binary

Brick Parameters

- Learning rate

Boosting learning rate. This parameter controls how quickly the algorithm learns a problem. Generally, a larger learning rate lets the model learn faster, while a smaller learning rate tends to reach a better final result but needs more iterations to get there.

- Number of iterations

The number of boosting iterations. It is recommended to set this parameter inversely to the selected learning rate: when you decrease one, increase the other.
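A rough way to see why these two parameters move in opposite directions is the idealized arithmetic below. It assumes, purely for illustration, that every tree fits the current residual exactly, so each iteration removes a fraction equal to the learning rate and leaves `(1 - learning_rate)` of the error behind. Real trees fit less perfectly, so the numbers are only indicative. Both function names are hypothetical.

```python
import math


def remaining_error_fraction(learning_rate, n_iterations):
    """Fraction of the initial error left after n idealized iterations."""
    return (1.0 - learning_rate) ** n_iterations


def iterations_needed(learning_rate, target_fraction=0.01):
    """Smallest number of idealized iterations that reaches the target fraction."""
    return math.ceil(math.log(target_fraction) / math.log(1.0 - learning_rate))


for rate in (0.3, 0.1, 0.01):
    print(rate, iterations_needed(rate))
```

Under this simplification, dividing the learning rate by ten multiplies the required number of iterations by roughly ten.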

- Number of leaves

The main parameter to control model complexity. Higher values can increase accuracy on the training data but may lead to overfitting.
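For readers who know the open-source LightGBM library, the three settings above plausibly correspond to the parameters in the fragment below. This mapping is an assumption: the brick's internals and its default values are not documented here. The values shown are the defaults of LightGBM's scikit-learn-style `LGBMClassifier`, not necessarily those of the brick.

```python
# Assumed correspondence between brick settings and LightGBM parameters.
lgbm_params = {
    "objective": "binary",   # binary classification task
    "learning_rate": 0.1,    # "Learning rate"
    "n_estimators": 100,     # "Number of iterations"
    "num_leaves": 31,        # "Number of leaves"
}

# With LightGBM installed, the dictionary could be used as:
#   model = lightgbm.LGBMClassifier(**lgbm_params)
```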

- Prediction mode

This parameter specifies the model's prediction format of the target variable:

- Class - get predictions as a single value of the 'closest' target class for each data point. Will create only one column with the "predicted_" prefix

- Probability of class - get the numerical probability of each class in a separate column.
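The difference between the two prediction modes can be sketched as follows. The 0.5 decision threshold and the `probability_` column names are assumptions made for the example; only the "predicted_" prefix comes from the description above. The function `format_predictions` is hypothetical.

```python
def format_predictions(probabilities, target, classes, mode="class", threshold=0.5):
    """Turn P(classes[1]) per row into output columns for the chosen mode."""
    if mode == "class":
        # One column holding the most likely class for each row.
        return {
            f"predicted_{target}": [
                classes[1] if p >= threshold else classes[0] for p in probabilities
            ]
        }
    if mode == "probability":
        # One column per class (column names are illustrative).
        return {
            f"probability_{classes[0]}": [1.0 - p for p in probabilities],
            f"probability_{classes[1]}": list(probabilities),
        }
    raise ValueError(f"unknown mode: {mode}")


as_class = format_predictions([0.25, 0.75], "survived", (0, 1), mode="class")
as_proba = format_predictions([0.25, 0.75], "survived", (0, 1), mode="probability")
```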

- Target Variable

The column that has the values you are trying to predict. Note that the column must contain exactly two unique values and no missing values; otherwise, a corresponding error message is shown.
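If you want to check a column before sending it to the brick, the two requirements can be tested with a small helper like the one below. It is a sketch of the rule as stated, not the brick's own validation code, and `validate_binary_target` is a hypothetical name.

```python
def validate_binary_target(values):
    """Raise ValueError unless the column has no missing values and two classes."""
    # `v != v` is true only for NaN, so this catches both None and NaN.
    if any(v is None or v != v for v in values):
        raise ValueError("target column contains missing values")
    unique = set(values)
    if len(unique) != 2:
        raise ValueError(f"target column must have exactly 2 unique values, found {len(unique)}")
    return unique


classes = validate_binary_target([0, 1, 1, 0, 1])
```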

- Optimize

This checkbox enables Bayesian hyperparameter optimization, which tunes the learning rate, the number of iterations, and the number of leaves to find the model configuration with the best metrics.

Be aware that this process is time-consuming.

- Filter Columns

If you have columns in your data that need to be ignored (but not removed from the data set) during the training process (and later during the predictions), you should specify them in this parameter. To select multiple columns, click the '+' button in the brick settings.

In addition, you can ignore all columns except the ones you specified by enabling the "Remove all except selected" option. This may be useful if you have a large number of columns while the model should be trained just on some of them.
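The effect of the two filtering options on the set of training columns can be sketched as follows. The function name `select_feature_columns` and the exclusion of the target column from the features are assumptions made for the example.

```python
def select_feature_columns(all_columns, target, selected, remove_all_except_selected=False):
    """Return the columns the model would be trained on."""
    if remove_all_except_selected:
        # Keep only the selected columns.
        return [c for c in all_columns if c in selected and c != target]
    # Default behaviour: ignore the selected columns, keep the rest.
    return [c for c in all_columns if c not in selected and c != target]


columns = ["passengerid", "name", "pclass", "sex", "age", "fare", "survived"]
ignored = select_feature_columns(columns, "survived", ["passengerid", "name"])
kept = select_feature_columns(columns, "survived", ["age", "fare"],
                              remove_all_except_selected=True)
```

In both cases the input data set itself is left unchanged; only the list of columns used for training and prediction differs.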

- Brick frozen

This parameter enables the frozen run for this brick. This means that the trained model is saved and the brick skips the training process during future runs, which may be useful after pipeline deployment.

This option appears only after a successful regular run.

Note that a frozen run will not be executed if the data structure has changed.
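What counts as a change in data structure is not specified here. A reasonable reading, assumed in the sketch below, is that the column names and their types must match those seen during training. The helpers `structure_changes` and `can_run_frozen` are hypothetical and only illustrate that kind of check.

```python
def structure_changes(trained_schema, incoming_schema):
    """Compare two {column: dtype} mappings and list the differences."""
    changes = []
    for column, dtype in trained_schema.items():
        if column not in incoming_schema:
            changes.append(f"missing column: {column}")
        elif incoming_schema[column] != dtype:
            changes.append(f"type changed: {column} ({dtype} -> {incoming_schema[column]})")
    for column in incoming_schema:
        if column not in trained_schema:
            changes.append(f"unexpected column: {column}")
    return changes


def can_run_frozen(trained_schema, incoming_schema):
    """A frozen run is only safe when nothing in the structure has changed."""
    return not structure_changes(trained_schema, incoming_schema)


trained = {"age": "float", "fare": "float", "sex": "int"}
same = {"age": "float", "fare": "float", "sex": "int"}
different = {"age": "int", "fare": "float", "cabin": "int"}
```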

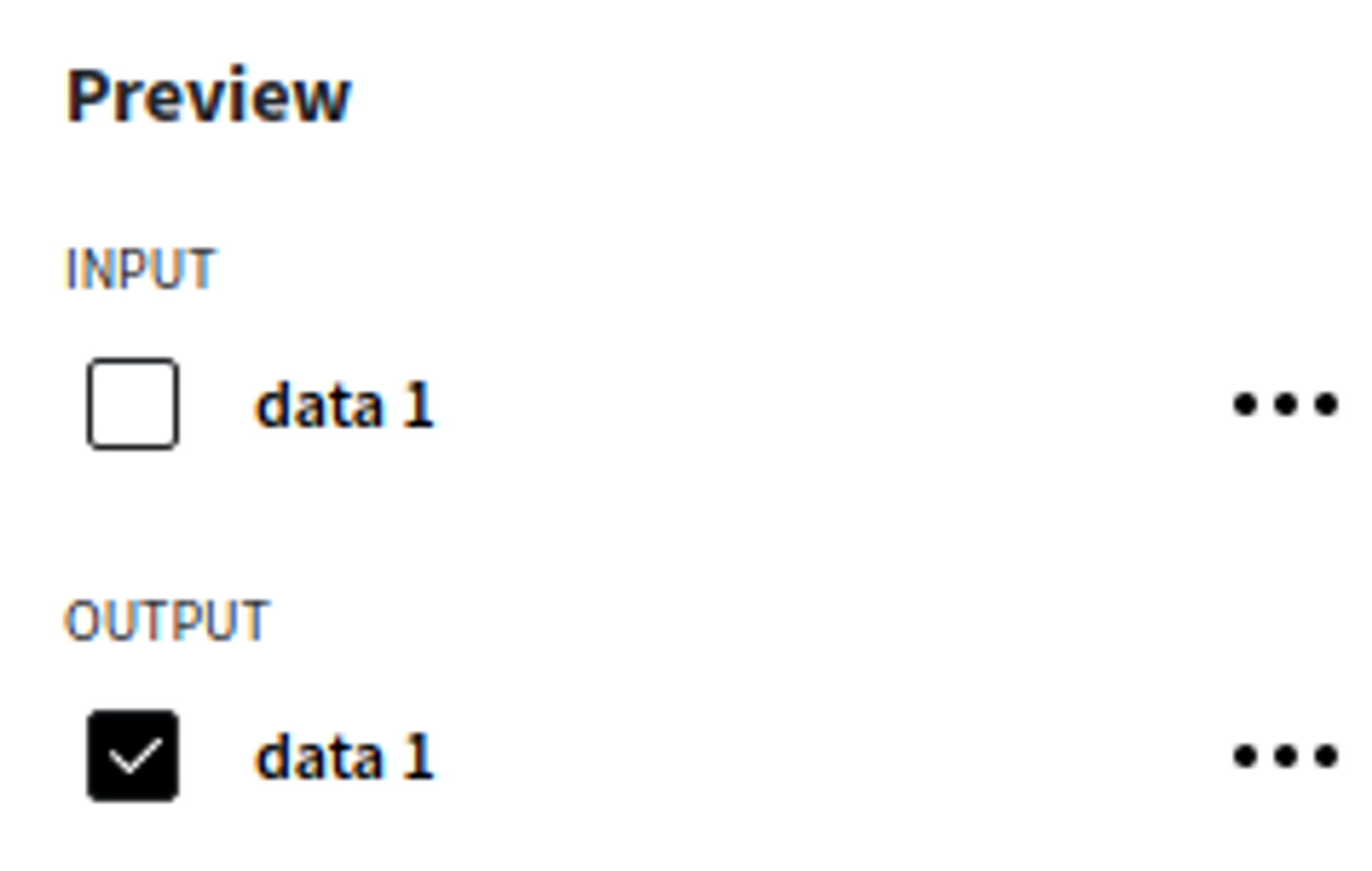

Brick Inputs/Outputs

- Inputs

The brick takes a data set with a target column that has exactly two unique values.

- Outputs

Brick produces two outputs as the result:

- Data - modified input data set with added columns for predicted classes or classes' probability

- Model - trained model that can be used in other bricks as an input

Additional Features

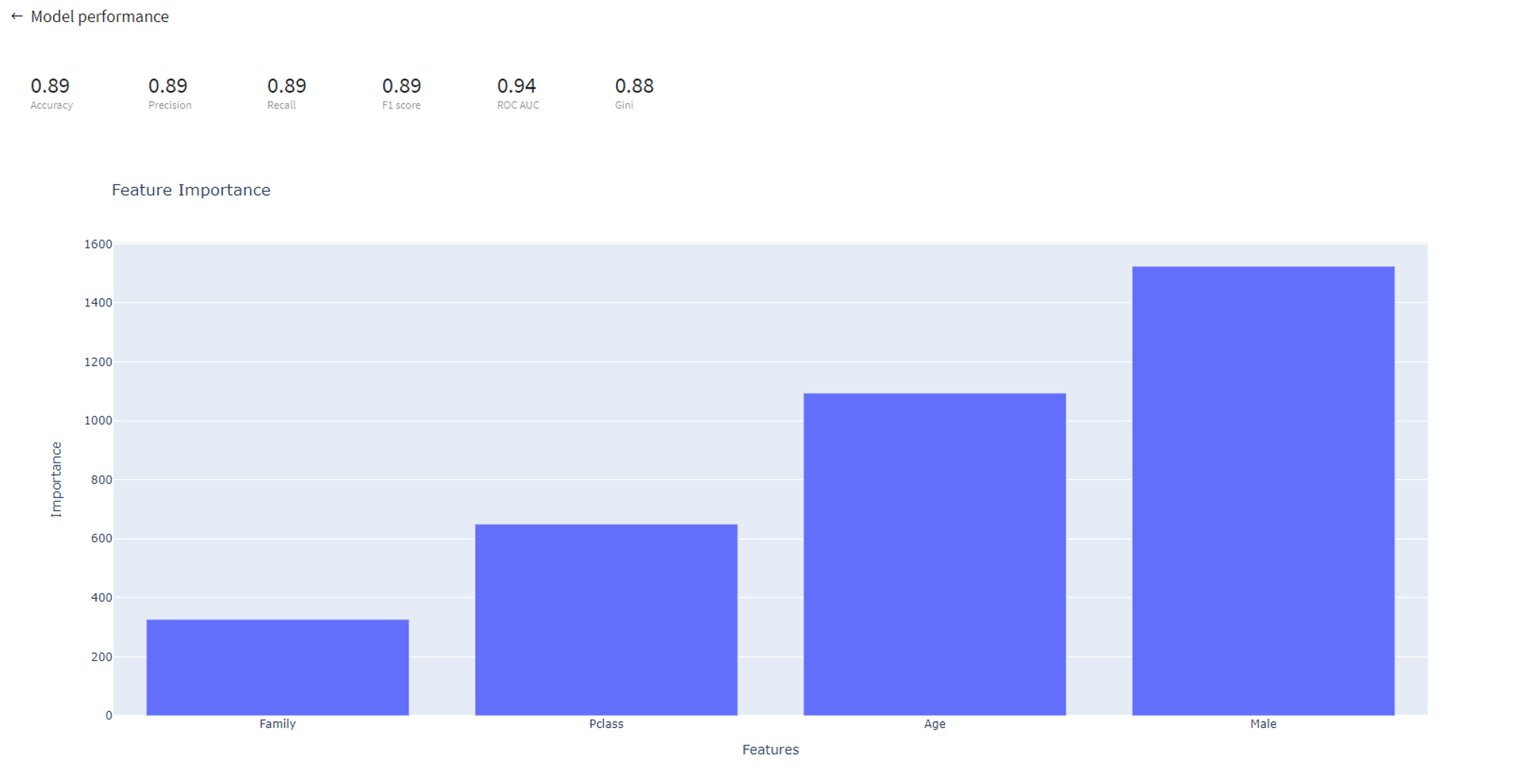

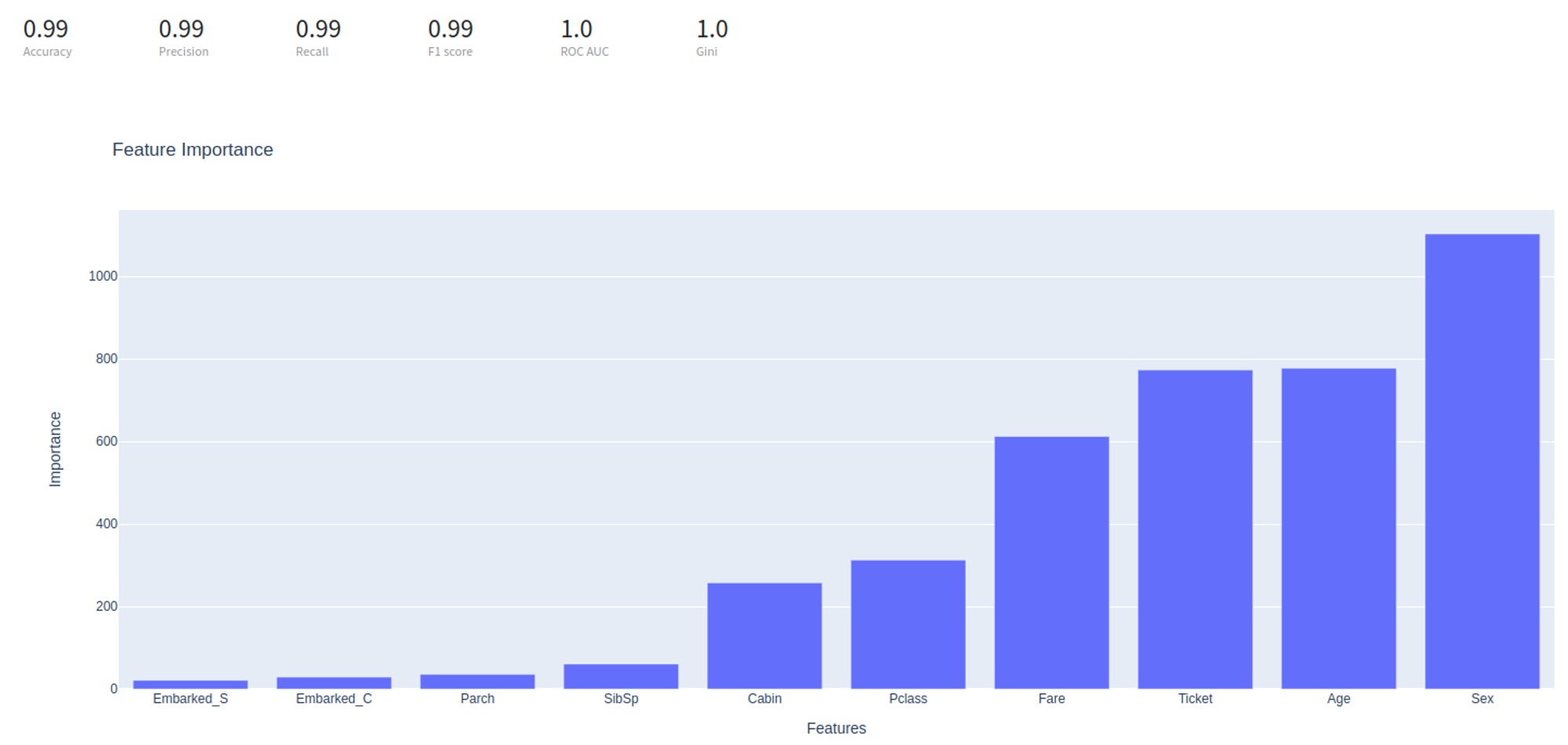

- Model Performance

This button (located in the 'Deployment' section) lets you check the model's performance metrics so that you can adjust your pipeline if needed.

Supported metrics: accuracy, precision, recall, f1-score, ROC AUC, Gini.

In addition, a feature importance graph is provided in the dashboard.
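For reference, the supported metrics can be computed by hand as in the sketch below. It assumes the usual definitions, with Gini taken as 2 × ROC AUC − 1; whether the dashboard uses exactly these conventions is an assumption. The function names and the sample data are made up for the example.

```python
def classification_metrics(y_true, y_pred, positive=1):
    """Accuracy, precision, recall, and F1 from true and predicted classes."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    tn = len(pairs) - tp - fp - fn
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {
        "accuracy": (tp + tn) / len(pairs),
        "precision": precision,
        "recall": recall,
        "f1": f1,
    }


def roc_auc(y_true, scores, positive=1):
    """Share of (positive, negative) pairs ranked correctly; ties count as half."""
    pos = [s for t, s in zip(y_true, scores) if t == positive]
    neg = [s for t, s in zip(y_true, scores) if t != positive]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


y_true = [0, 0, 1, 1, 0, 1]
scores = [0.1, 0.4, 0.35, 0.8, 0.2, 0.7]          # predicted probability of class 1
y_pred = [1 if s >= 0.5 else 0 for s in scores]   # class predictions at threshold 0.5

metrics = classification_metrics(y_true, y_pred)
auc = roc_auc(y_true, scores)
gini = 2 * auc - 1
```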

- Save model asset

This option provides a mechanism to save your trained models so that you can use them in other projects. To do this, either specify the model's name or create a new version of an already existing model, in which case you specify the new version's name.

- Download model asset

Use this feature if you want to download the model asset for use outside the Datrics platform.

Example of usage

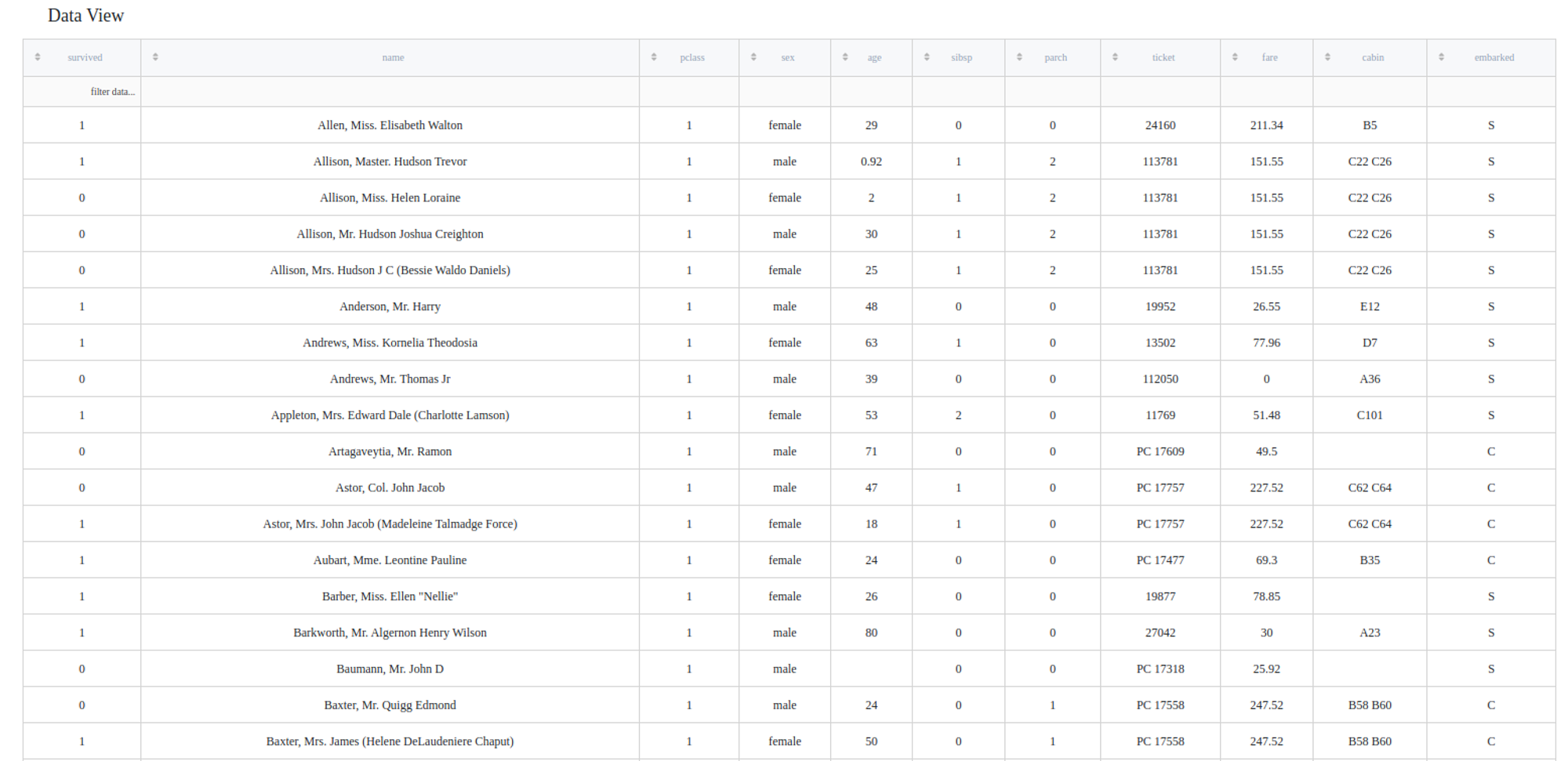

Let's consider the following binary classification problem.

The inverse target variable takes two values: survived (0), the good or non-event case, and not-survived (1), the bad or event case. General information about the predictors is given below:

- passengerid (category/int) - ID of passenger

- name (category/string) - Passenger's name

- pclass (category/int) - Ticket class

- sex (category/string) - Gender

- age (numeric) - Age in years

- sibsp (numeric) - Number of siblings / spouses aboard the Titanic

- parch (category/int) - Number of parents / children aboard the Titanic

- ticket (category/string) - Ticket number (contains letters)

- fare (numeric) - Passenger fare

- cabin (category/string) - Cabin number (contains letters)

- embarked (category/string) - Port of Embarkation

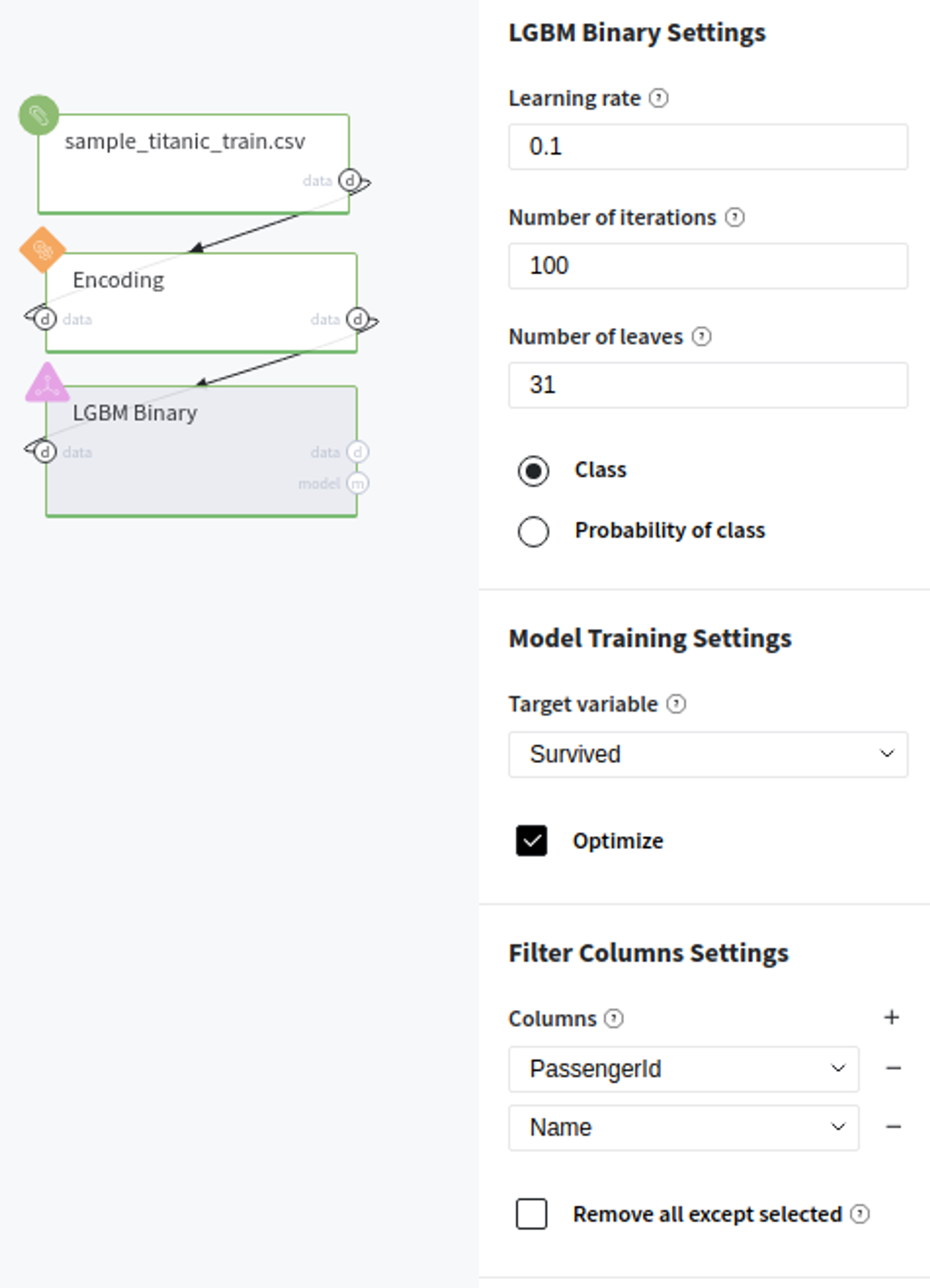

Some of the data columns are strings, which is not a supported data format for the LGBM Binary classifier. While the name column can be discarded, the other string columns contain useful information, so we need to encode them as follows:

- embarked - one-hot

- ticket, cabin - label

- sex - binary

In addition, passengerId and name do not contain useful information, so they will be filtered out.
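The three encodings named above can be sketched in plain Python as shown below. The sketch assumes columns without missing values and treats binary encoding of a two-valued column as a single 0/1 column; the Encoding brick's actual output columns may differ. The function names and sample values are illustrative.

```python
def one_hot(values, prefix):
    """One 0/1 column per category."""
    categories = sorted(set(values))
    return {f"{prefix}_{c}": [1 if v == c else 0 for v in values] for c in categories}


def label_encode(values):
    """Replace each category with an integer code (sorted order)."""
    mapping = {c: i for i, c in enumerate(sorted(set(values)))}
    return [mapping[v] for v in values]


def binary_encode(values):
    """Encode a two-valued column as 0/1."""
    categories = sorted(set(values))
    if len(categories) != 2:
        raise ValueError("expected exactly two categories")
    return [categories.index(v) for v in values]


embarked = ["S", "C", "Q", "S"]
ticket = ["A/5 21171", "PC 17599", "113803", "A/5 21171"]
sex = ["male", "female", "female", "male"]

embarked_encoded = one_hot(embarked, "embarked")
ticket_encoded = label_encode(ticket)
sex_encoded = binary_encode(sex)
```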

Executing regular pipeline

The following steps build a simple test pipeline:

- First, drag and drop the titanic.csv file from the Storage → Samples folder, the Encoding brick from Bricks → Data Preprocessing, and the LGBM Binary brick from Bricks → ML

- Connect the data set to the Encoding brick, set it up according to the previous section, and then connect it to the LGBM Binary model

- Select LGBM Binary, choose either Class or Probability of class mode, specify the target variable (Survived column) and columns to filter (you can add several of them by pressing the '+' symbol)

- Check the Optimize option if you like

- Run pipeline

To see the processed data with the new columns, open the Output data previewer on the right sidebar.

The results are depicted in the table:

To see the model's performance, choose the corresponding option in the Deployment section:

Executing frozen pipeline run

After completing the previous steps, check the 'Brick frozen' option in the 'General Settings' section:

This ensures that every time we execute our pipeline, the LGBM Binary brick loads the model trained during previous runs, which may be useful during deployment. Please note that the brick will raise an error if the data structure has changed.