General information

Class weights can be used to balance a dataset. They give every class equal importance in gradient updates during training, regardless of how many samples each class has in the training data. This keeps the model from predicting the most frequent class more often simply because it is more common.
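To make the idea concrete, here is a minimal sketch of one common way to derive class weights: the "balanced" heuristic `n_samples / (n_classes * class_count)`. This is an assumption for illustration only; the formula behind the brick's ‘Auto Suggestion’ is not documented here and may differ. The function name `balanced_class_weights` and the toy labels are hypothetical.

```python
from collections import Counter


def balanced_class_weights(labels):
    """Return {class: weight} using n_samples / (n_classes * class_count).

    Assumption: this is the widely used "balanced" heuristic, not
    necessarily what the brick computes.
    """
    counts = Counter(labels)
    n_samples = len(labels)
    n_classes = len(counts)
    return {cls: n_samples / (n_classes * cnt) for cls, cnt in counts.items()}


# Hypothetical imbalanced target column: six rows of class 0, two of class 1.
labels = [0, 0, 0, 0, 0, 0, 1, 1]
weights = balanced_class_weights(labels)

# Map the per-class weights to rows, as a weight column would do.
row_weights = [weights[y] for y in labels]

# Sum the row weights per class to check that the classes are balanced.
total_per_class = {
    cls: sum(w for w, y in zip(row_weights, labels) if y == cls)
    for cls in weights
}
print(weights)
print(total_per_class)
```

With these weights the rare class gets a larger weight per row, and the summed weight of each class comes out the same, which is what "equal importance" means in practice.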

Description

Brick Locations

Bricks → Machine Learning → Classes Weighting

Brick Parameters

- Target variable

The column that contains the target variable (the class labels).

- Weights adjustment

To activate this parameter, run the pipeline.

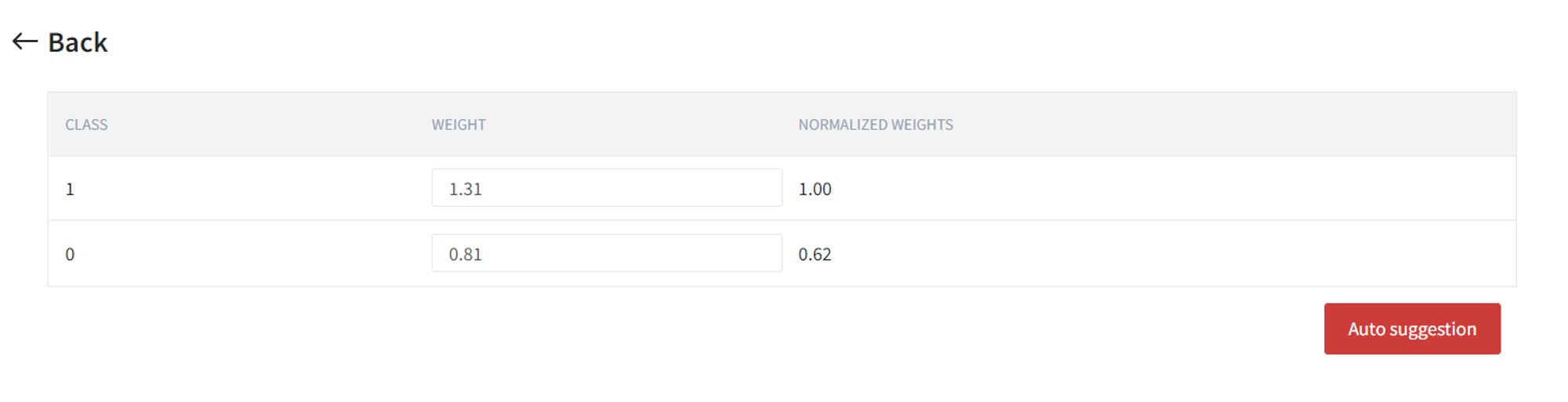

There you can enter the weights manually or use ‘Auto Suggestion’ to have them calculated for you.

Brick Inputs/Outputs

- Inputs

The brick takes a dataset that contains a column of class labels.

- Outputs

The brick produces the dataset with an added weight column.

Example of usage

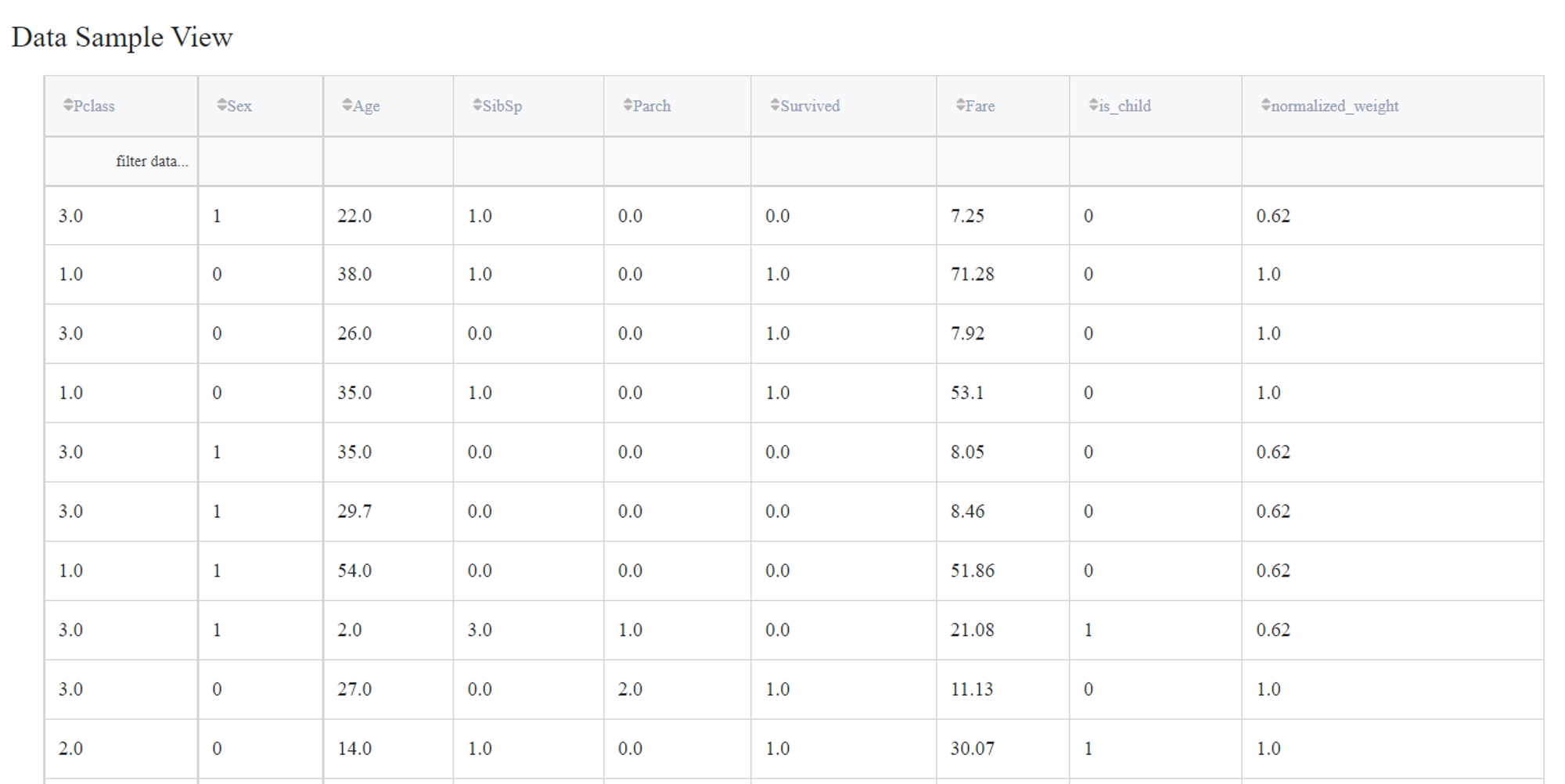

Let's consider the binary classification problem described in “Binary classification: Titanic”.

The dataset is imbalanced because the ‘survived’ column contains fewer ones than zeros. That is why it can be useful to apply class weighting before training a classification model.

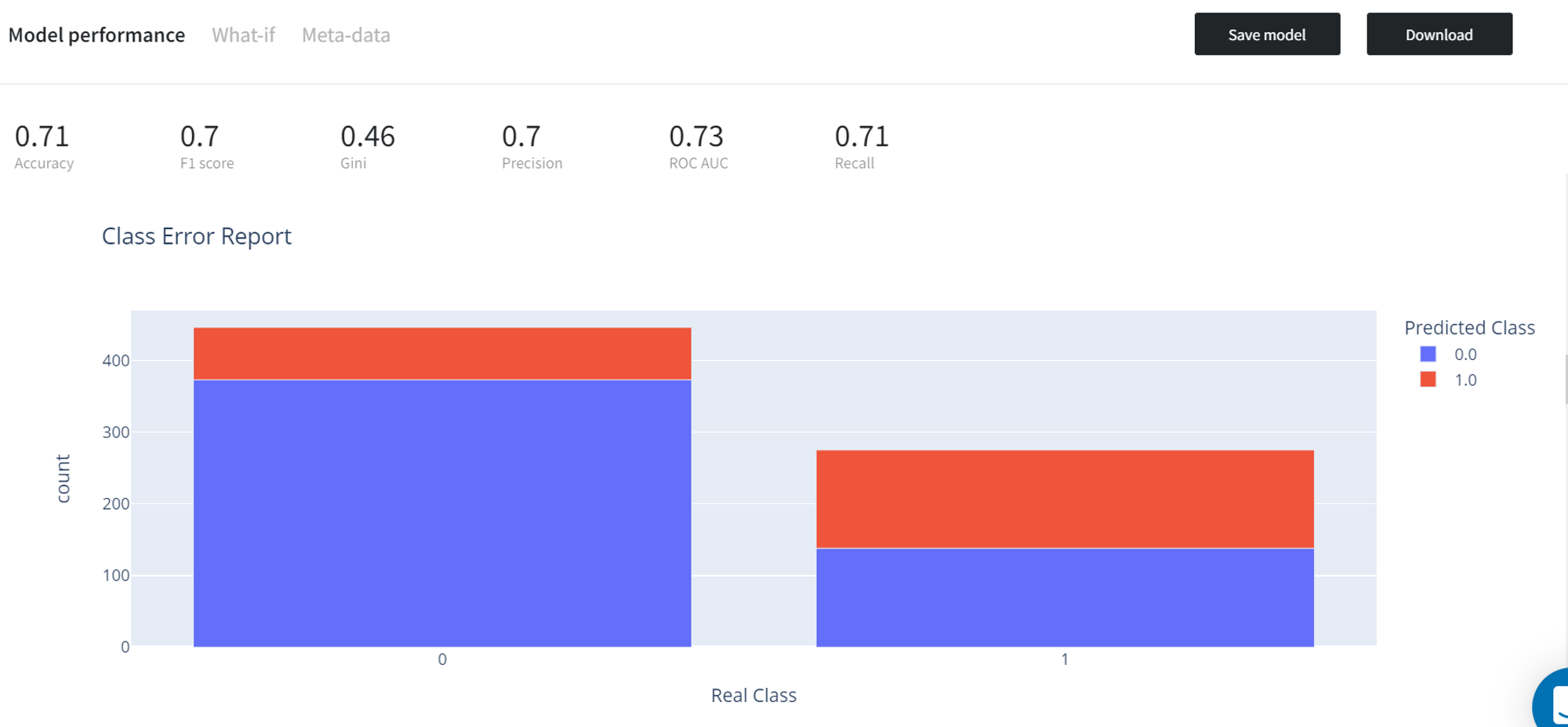

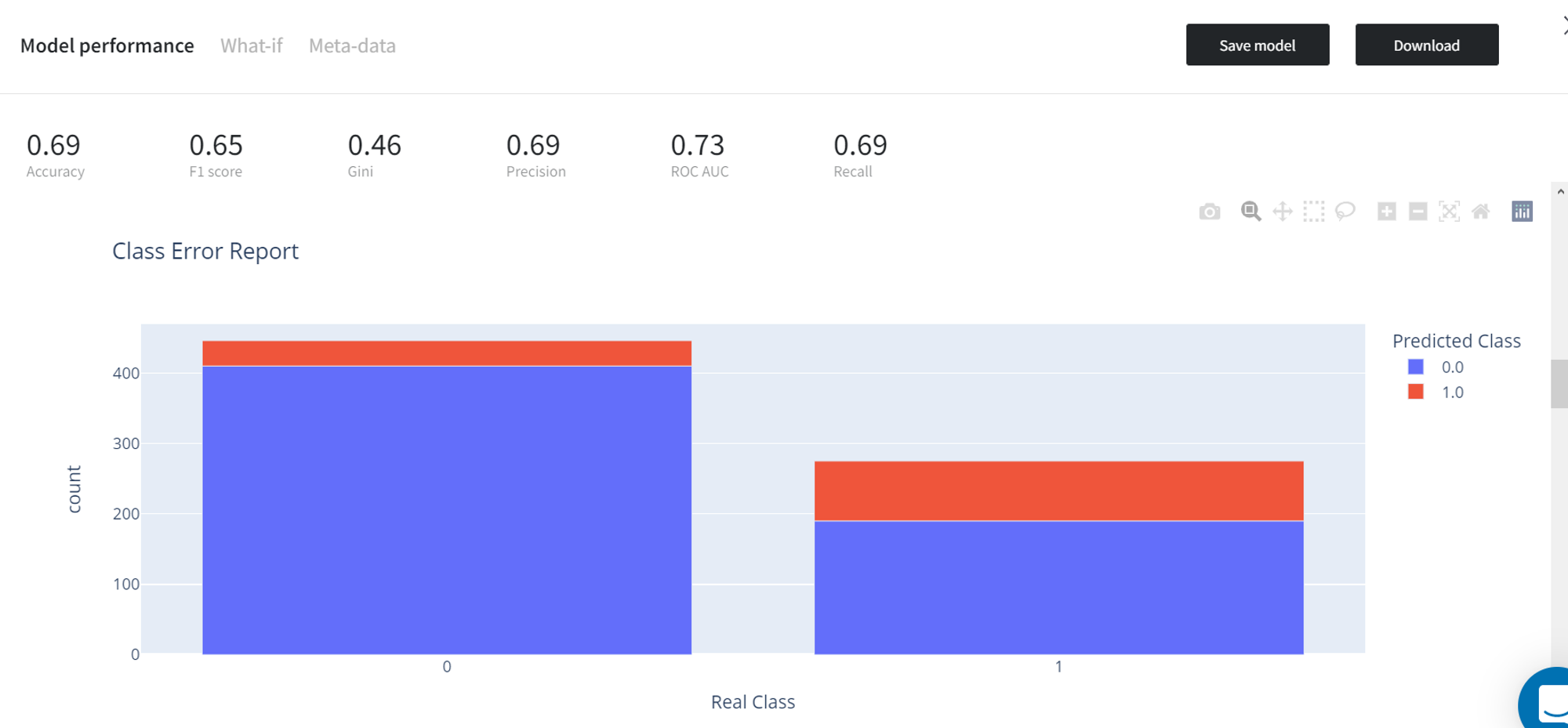

We use a simple Logistic Regression to classify the data and get the following result. We notice that even for the actual class 1 the model predicts 0 more often, because of the imbalance in the initial data.

Let’s add the Classes Weighting brick to see what changes.

As the ‘Target variable’ we choose ‘survived’ and use ‘Auto Suggestion’.

As a result, we get the dataset with a new “normalized weight” column.

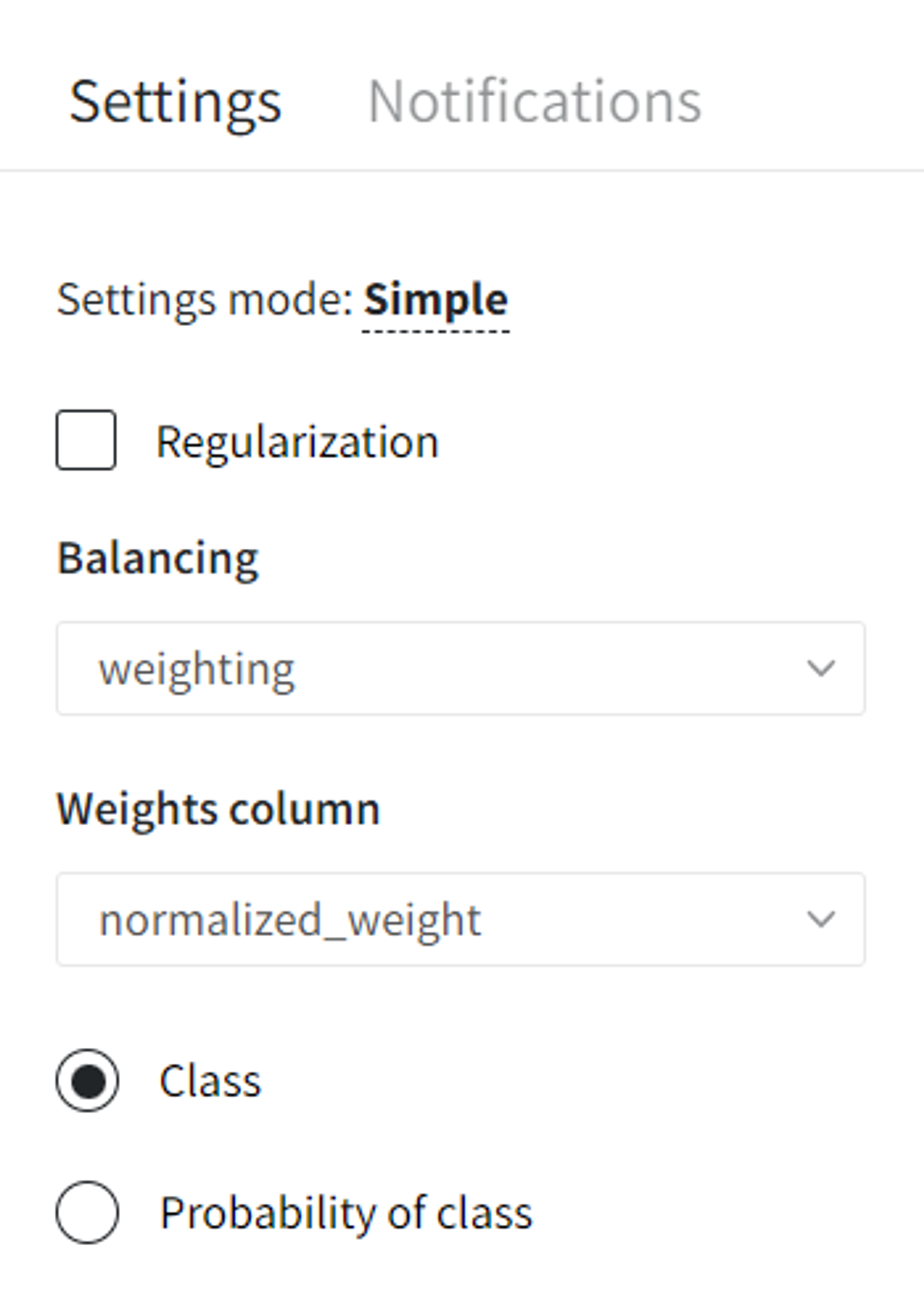

Then, to train our model on the weighted dataset, we choose ‘weighting’ as the Balancing method in the sidebar and select our normalized weights column as the Weights column.

As a result, all the metrics improved, and the number of ‘1’ predictions for the actual class ‘1’ increased.
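Why a weights column changes the trained model can be sketched with a weighted log loss. The snippet below is an illustration under assumptions, not the platform's implementation: `weighted_log_loss` is a hypothetical helper, the labels and predictions are toy values, and the weights follow the "balanced" heuristic rather than the brick's actual output.

```python
import math


def weighted_log_loss(y_true, p_pred, sample_weight):
    """Weighted mean of the per-row binary cross-entropy."""
    total = 0.0
    for y, p, w in zip(y_true, p_pred, sample_weight):
        total += -w * (y * math.log(p) + (1 - y) * math.log(1 - p))
    return total / sum(sample_weight)


# Toy imbalanced target: six rows of class 0, two rows of class 1.
y_true = [0, 0, 0, 0, 0, 0, 1, 1]

# A hypothetical model that leans toward the majority class on every row
# (predicted probability of class 1 is always 0.2).
p_pred = [0.2] * 8

uniform_weights = [1.0] * 8               # no balancing
balanced_weights = [2 / 3] * 6 + [2.0] * 2  # assumed per-row class weights

loss_unweighted = weighted_log_loss(y_true, p_pred, uniform_weights)
loss_weighted = weighted_log_loss(y_true, p_pred, balanced_weights)
print(loss_unweighted, loss_weighted)
```

With uniform weights, always leaning toward class 0 is cheap because the misclassified rows are few. With balanced weights the same predictions cost more, so the optimizer is pushed toward predicting ‘1’ more often for the minority class.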